Artificial intelligence for intraoperative neuromonitoring: signal interpretation, risk prediction, and clinical translation

Abstract

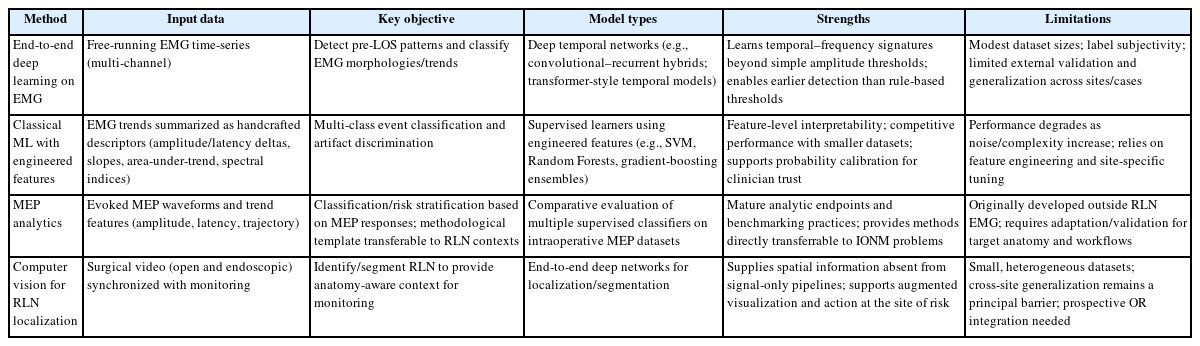

This review examines how artificial intelligence and machine learning are reshaping intraoperative neuromonitoring for thyroid and head and neck surgery, with emphasis on protecting the recurrent laryngeal nerve. We synthesize four methodological strands: end-to-end deep learning on electromyography, classical machine learning with engineered features, motor evoked potential analytics, and computer vision for nerve localization. We map inputs, model classes, and objectives, and compare recurrent laryngeal nerve palsy prediction pipelines that use intraoperative electromyography trend dynamics, registry-based clinical ensembles, and voice spectrogram-derived outcomes. For real-time safety, we contrast threshold-based alerts with machine learning detectors and hybrid systems, and we highlight interpretability, acquisition-to-alert latency, and robustness to artifacts. Evidence includes prospective evaluations within operating room workflows, yet gaps remain in external validation and generalization across sites. We outline deployment principles that include calibrated graded alerts, standardized visualization, and surgeon-in-the-loop operation aligned with Standard Protocol Items: Recommendations for Interventional Trials–Artificial Intelligence (SPIRIT-AI), the Consolidated Standards of Reporting Trials–Artificial Intelligence (CONSORT-AI), and Developmental and Exploratory Clinical Investigations of DEcision support systems driven by Artificial Intelligence (DECIDE-AI). Together, these elements can enable earlier and more reliable detection and risk stratification while preserving clinical transparency.

Introduction

Injury to the recurrent laryngeal nerve (RLN) remains one of the most consequential complications of thyroid and head-and-neck surgery, leading to dysphonia, aspiration, and lasting limitations in social participation and quality of life. Over the past three decades, intraoperative neuromonitoring (IONM) has progressed from an optional adjunct to a widely adopted tool that complements visual identification with functional assessments of vagal and RLN conduction. Intermittent monitoring verifies nerve function at predefined stages of the operation: an initial vagal baseline, the first confirmation of the RLN, a reassessment after dissection, and a final vagal check at the end of the case. Continuous monitoring adds automatic periodic stimulation (APS) of the vagus nerve at a low frequency to provide near real-time trends in amplitude and latency, allowing recognition of evolving neuropraxia before a clear loss of signal (LOS) occurs. In 2018, the International Neural Monitoring Study Group (INMSG) issued evidence-based guidelines that standardized definitions, outlined troubleshooting pathways, and set forth a staging algorithm for bilateral procedures when early LOS is detected on the first side [1]. These normative documents catalyzed outcome-oriented research on how trend dynamics relate to postoperative vocal fold mobility and how monitoring should inform real-time surgical decisions [2].

A second, equally powerful trend is the rise of high-frequency, long-duration perioperative data streams: free-running laryngeal electromyography (EMG), evoked responses including motor evoked potentials (MEP), and, increasingly, synchronized surgical video [3]. Manual interpretation of these data is cognitively demanding, prone to false positives from endotracheal tube malrotation, loss of electrode–mucosa contact, electrocautery interference, or residual neuromuscular blockade, and marked by inter-operator variability. Against this backdrop, machine learning (ML) and deep learning provide pattern-recognition capabilities that can denoise and classify EMG/MEP waveforms, detect pre-LOS deterioration earlier than static thresholds, and calibrate risk estimates to guide actions such as traction release or staging [3-5]. Complementary computer-vision models can identify the RLN and related anatomy in surgical video, supplying spatial context for signal-based alerts. We conducted a narrative review of peer-reviewed English-language studies published 2015–2025 by searching PubMed, Embase, and Scopus with predefined terms (IONM, RLN, EMG, MEP, LOS, ML), using dual independent screening and data extraction. This review synthesizes the literature on artificial intelligence and machine learning (AI/ML) for IONM signal interpretation, surveys predictive models for RLN palsy, and proposes a practical roadmap for clinical integration aligned with contemporary AI trial-reporting guidance, including the Standard Protocol Items: Recommendations for Interventional Trials–Artificial Intelligence (SPIRIT-AI), the Consolidated Standards of Reporting Trials–Artificial Intelligence (CONSORT-AI), and Developmental and Exploratory Clinical Investigations of DEcision support systems driven by Artificial Intelligence (DECIDE-AI) [6,7].

Background: Fundamentals of Intraoperative Neuromonitoring and Current Limitations

From a physiological and workflow perspective, IONM employs endotracheal surface electrodes to record compound muscle action potentials from the intrinsic laryngeal musculature during stimulation of the vagus nerve or the RLN. In intermittent monitoring, nerve function is confirmed at predefined junctures: an initial vagal baseline, the first identification of the RLN, a reassessment after dissection, and a final vagal check at the end of the case. Continuous monitoring introduces APS of the vagus at approximately 1–3 Hz and yields near-continuous trends in amplitude and latency [1]. Within this framework, the INMSG defines LOS as an EMG amplitude below 100 μV under standardized stimulation; segmental, or type 1, patterns indicate focal conduction block, whereas global, or type 2, patterns suggest proximal or technical causes. These distinctions carry clear prognostic and management implications [8,9].

Injury dynamics and the severe combined event (sCE) are now well characterized. Prospective studies using continuous neuromonitoring show that traction-related neuropraxia typically appears first as a progressive reduction in amplitude accompanied by an increase in latency before hard LOS. A composite criterion known as the sCE, defined by a reduction in amplitude of at least 50% together with an increase in latency of at least 10% relative to baseline, has been linked to early postoperative vocal fold immobility when corrective action is delayed, and recovery of the signal is associated with favorable early mobility [10,11]. These observations anchor both real-time troubleshooting and quantitative endpoints for predictive modeling [2,12].
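As a minimal illustration, the LOS and sCE definitions above can be expressed as two short Python checks applied to a single stimulated response. The `Baseline` container, the function names, and the per-response framing are illustrative assumptions and are not drawn from any cited protocol; clinical criteria also presuppose standardized stimulation and troubleshooting steps that this sketch does not model.

```python
from dataclasses import dataclass


@dataclass
class Baseline:
    """Hypothetical container for the initial vagal baseline."""
    amplitude_uv: float  # baseline EMG amplitude in microvolts
    latency_ms: float    # baseline latency in milliseconds


def is_los(amplitude_uv: float, los_threshold_uv: float = 100.0) -> bool:
    """LOS component: amplitude below 100 uV (stimulation assumed standardized)."""
    return amplitude_uv < los_threshold_uv


def is_sce_sample(amplitude_uv: float, latency_ms: float, baseline: Baseline,
                  amp_drop: float = 0.50, lat_rise: float = 0.10) -> bool:
    """sCE component check for one response.

    True when amplitude has fallen by at least `amp_drop` AND latency has
    risen by at least `lat_rise`, both expressed relative to baseline.
    """
    if baseline.amplitude_uv <= 0 or baseline.latency_ms <= 0:
        raise ValueError("baseline values must be positive")
    amp_reduction = (baseline.amplitude_uv - amplitude_uv) / baseline.amplitude_uv
    lat_increase = (latency_ms - baseline.latency_ms) / baseline.latency_ms
    return amp_reduction >= amp_drop and lat_increase >= lat_rise


if __name__ == "__main__":
    base = Baseline(amplitude_uv=1000.0, latency_ms=4.0)
    # 60% amplitude drop with a 12.5% latency rise meets both components.
    print(is_sce_sample(400.0, 4.5, base))
    # Amplitude drop alone does not meet the composite criterion.
    print(is_sce_sample(400.0, 4.1, base))
```

Requiring both components together mirrors the composite nature of the criterion: an isolated amplitude drop or an isolated latency rise does not qualify on its own.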

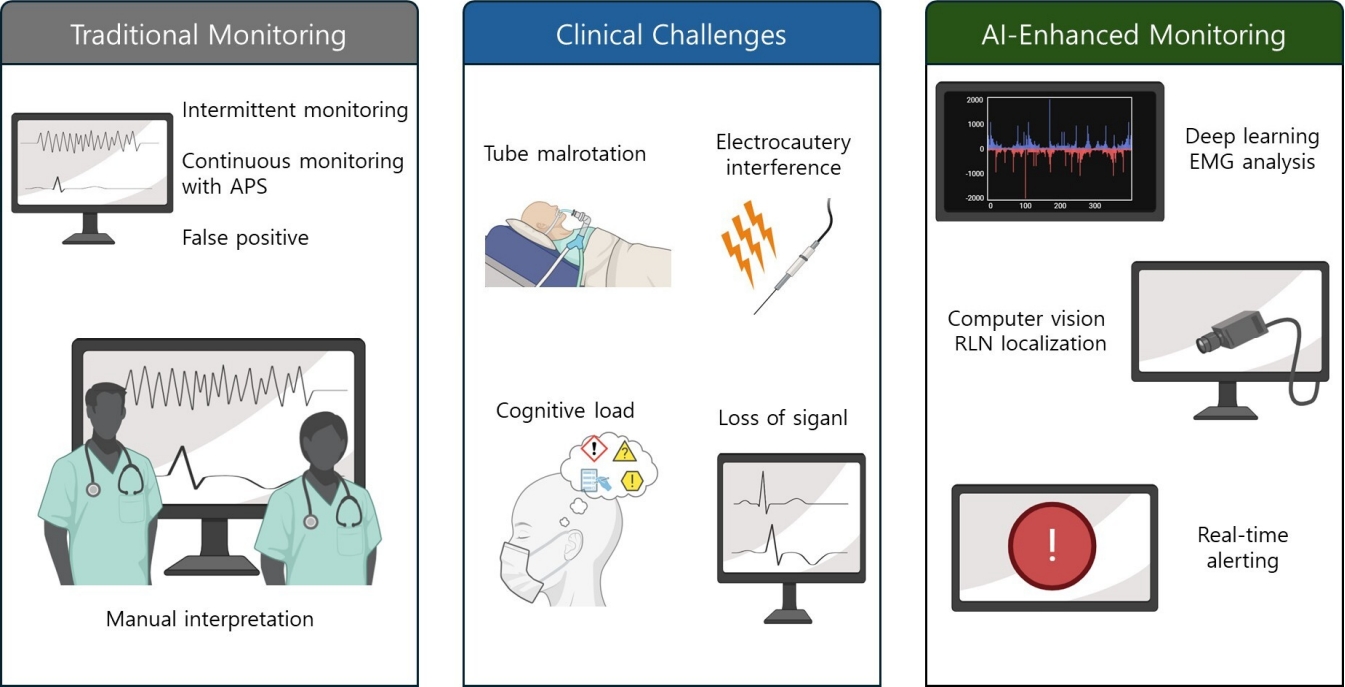

Key limitations persist despite standardization. Monitoring remains vulnerable to artifacts arising from endotracheal tube displacement or malrotation, pooled secretions, inadequate electrode–mucosa contact, and interference from electrocautery, and it is further constrained by device heterogeneity in filtering and gain, by inconsistent thresholds across centers, and by cognitive load at the console. Even with continuous neuromonitoring, subtle pre-LOS patterns may be overlooked and technical artifacts may be misclassified as neurogenic change, which argues for automated, robust, and interpretable analytics that operate at the cadence of APS and provide clear, actionable "why-now" explanations [3,13,14]. To motivate an augmented approach, Figure 1 contrasts legacy monitoring, prevalent clinical failure modes, and an AI-enhanced workflow that integrates analytics, synchronized video, and graded alerts.

Figure 1. From traditional monitoring to AI-enhanced IONM: a visual overview. Conventional console-based monitoring centered on EMG/MEP traces (left). Common clinical challenges that erode reliability, including tube malposition, electrocautery artifacts, ambiguous or evolving signals, and cognitive load (middle). AI-enhanced monitoring that couples analytics on trends, synchronized surgical video, and graded alerts to support earlier and more actionable decisions (right). APS, automatic periodic stimulation; EMG, electromyography; RLN, recurrent laryngeal nerve; MEP, motor evoked potential; AI, artificial intelligence.

Artificial Intelligence and Machine Learning in Intraoperative Neuromonitoring Signal Interpretation

1. End-to-end deep learning on electromyography

Regarding objectives and data, EMG-centric investigations typically aim to classify EMG morphologies, to detect pre-LOS trends in streaming signals, and to suppress artifacts. One representative end-to-end approach used a hybrid of convolutional and recurrent layers trained on free-running EMG segments collected during thyroidectomy and achieved cross-patient accuracies near or above 0.85 with sensitivities of 0.90 or higher for abnormal morphologies; in carefully curated long streams, it reported sensitivity and specificity exceeding 0.97 under stringent artifact thresholds [15]. Although sample sizes and labeling protocols vary, these experiments demonstrate the feasibility of automatic triage of long EMG sequences and lay the groundwork for prospective always-on alerting [3,16].

With respect to strengths and gaps, deep temporal models such as convolutional–recurrent hybrids and emerging transformers can learn discriminative time and frequency signatures that surpass simple amplitude thresholds. Nonetheless, available datasets are modest in size, labeling is vulnerable to variability between raters, and external validation is limited. Only a small number of studies report end-to-end system latency in the operating room, a metric that is essential for establishing feasibility for intraoperative decision support [17].

2. Classical machine learning with engineered features

An alternative strategy engineers interpretable descriptors (amplitude and latency deltas, slopes, area-under-trend, and spectral entropy) that are then fed to support vector machines, random forests, or gradient-boosting ensembles. In broader IONM contexts, classical ML has proven competitive on multi-class tasks under careful normalization and site-specific calibration, while offering transparent feature importance that supports clinician trust [16]. Yet, as complexity and noise increase, purely feature-engineered systems may underperform deep learning unless they are regularly adapted to local device characteristics [18,19].
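To make the engineered descriptors concrete, the following sketch computes them for a short window of APS trend data using only the Python standard library. The function names, the dictionary keys, and the choice to normalize deltas to baseline are assumptions made for illustration; the cited studies may define and scale these features differently, and the naive discrete Fourier transform is used only to keep the example dependency-free.

```python
import cmath
import math


def slope(times, values):
    """Least-squares slope of `values` against `times`."""
    n = len(values)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    num = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    den = sum((t - mean_t) ** 2 for t in times)
    return num / den


def area_under_trend(times, values):
    """Trapezoidal area under the trend curve."""
    return sum(0.5 * (values[i] + values[i + 1]) * (times[i + 1] - times[i])
               for i in range(len(values) - 1))


def spectral_entropy(signal):
    """Normalized Shannon entropy of the power spectrum.

    Values near 0 indicate a single dominant frequency; values near 1
    indicate a flat spectrum. The mean is removed and the DC bin is skipped.
    """
    n = len(signal)
    half = n // 2
    if half < 2:
        return 0.0
    mean = sum(signal) / n
    centered = [x - mean for x in signal]
    power = []
    for k in range(1, half + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(centered))
        power.append(abs(coeff) ** 2)
    total = sum(power)
    if total == 0:
        return 0.0
    probs = [p / total for p in power if p > 0]
    return -sum(p * math.log(p) for p in probs) / math.log(len(power))


def trend_features(times_s, amplitudes_uv, latencies_ms,
                   baseline_amp_uv, baseline_lat_ms):
    """Assemble a hypothetical feature vector for one analysis window."""
    return {
        "amp_delta": (amplitudes_uv[-1] - baseline_amp_uv) / baseline_amp_uv,
        "lat_delta": (latencies_ms[-1] - baseline_lat_ms) / baseline_lat_ms,
        "amp_slope": slope(times_s, amplitudes_uv),
        "lat_slope": slope(times_s, latencies_ms),
        "amp_area": area_under_trend(times_s, amplitudes_uv),
    }


if __name__ == "__main__":
    feats = trend_features([0, 1, 2, 3], [1000, 900, 800, 700],
                           [4.0, 4.1, 4.2, 4.3], 1000, 4.0)
    print(feats)
```

Because each feature has a direct physiological reading, a downstream classifier's feature importances can be shown to the surgical team in familiar terms.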

3. Motor evoked potentials analytics as a transferable paradigm

Although most MEP analytics derive from neurosurgical and spinal IONM, their methodological contributions are directly transferable. In a 2024 multicenter study in Computers in Biology and Medicine, Boaro et al. [20] trained five classifiers on an intraoperative MEP database assembled across six centers and compared model performance with human experts; ML attained expert-level muscle classification on held-out patients, illustrating that supervised models can scale to complex intraoperative signals with center-level heterogeneity. Follow-on bicentric work emphasized explainability, using feature attribution to highlight signal components driving classifications, an essential ingredient for surgeon acceptance [21].

4. Computer vision to localize the recurrent laryngeal nerve in surgical video

Computer-vision analysis provides the spatial context that signal-only systems lack. Gong et al. [4] developed an end-to-end deep network that identifies and segments the RLN during open thyroidectomy. The model achieved quantitative segmentation performance sufficient to support augmented visualization. More recent endoscopic cohorts show similar feasibility for nerve recognition, which suggests that EMG-based alerts can be fused with anatomy-aware overlays to guide traction release or energy device use at the precise site of risk [4].

Taken together, evidence across EMG, MEP, and surgical video points to a consistent pattern. To consolidate Sections 3.1–3.4, Table 1 contrasts inputs, objectives, representative models, strengths, and limitations across four IONM strategies. Deep temporal models generally surpass static thresholding when they encounter complex morphologies. For clinical adoption, systems must provide calibrated uncertainty and clear explanations in the operating room, and strong cross-site generalization remains the principal barrier to translation. A practical near-term strategy is a hybrid workflow that preserves sCE and loss-of-signal rules for safety and interpretability while using ML to filter artifacts, prioritize risk, and detect earlier trend inflections [16,22].

Recurrent Laryngeal Nerve Palsy Prediction Models

1. Inputs, architectures, and datasets

Predictors of postoperative RLN dysfunction fall into signal-anchored features and patient/procedural covariates [10]. Signal-anchored features include LOS type and timing, end-of-case amplitudes (R2/V2), relative amplitude drop, latency rise, and intraoperative signal recovery (ISR) after LOS. Classical multivariable logistic regression and receiver operating characteristic-derived thresholds have dominated to date, though registry-scale studies have introduced ensembles (Super Learner, XGBoost) for composite outcomes [2]. Deep learning is beginning to learn directly from raw EMG and associated perioperative data streams but has not yet produced multicenter, prospective, in-loop evaluations in thyroid surgery [3,23].

2. Signal-anchored prognostics with prospective evidence

Prospective evidence provides the foundation for most intraoperative decision rules. In a continuous monitoring cohort of 785 patients, Schneider et al. [2,10] described the dynamics of signal loss and recovery and linked type 1 and type 2 patterns to early vocal fold mobility. This study then defined actionable ISR thresholds, showing that amplitude recovery around the 50 percent level after signal loss was associated with normal early vocal fold function, whereas absent or minimal recovery was associated with transient palsy. These cutoffs, derived from receiver operating characteristic analyses, translate into practical go or no-go decisions for staging and supply labeled outcomes for training ML classifiers [2,10].

3. Registry-based ensembles for complications (including recurrent laryngeal nerve injury)

Analyses of American College of Surgeons National Surgical Quality Improvement Program thyroidectomy–targeted data indicate that ensemble learning provides modest gains over logistic regression for predicting postoperative complications, including RLN injury and cervical hematoma. The absence of waveform inputs and the post hoc character of registry modeling limit usefulness during the operation. These observations support the development of integrated systems that combine patient-level risk with streaming EMG to deliver timely, individualized estimates that can influence intraoperative decisions [3,16].

4. Voice-centric outcomes beyond binary palsy

Beyond frank palsy, voice quality is a patient-salient endpoint. A 2022 study in Sensors trained a deep network on pre- and early postoperative voice spectrograms to predict 3-month GRBAS scores after thyroid surgery, achieving promising discrimination. Such work suggests a future in which intraoperative risk estimates are linked to longitudinal functional outcomes, enabling targeted rehabilitation or nerve-reinnervation strategies even when laryngoscopy is normal [24].

Regarding validation status and remaining gaps, most palsy-prediction studies are retrospective and single-center. Even when thresholds are derived prospectively from intraoperative signals, they often require recalibration across devices and anesthetic regimens. Only a small subset of models reports real-time metrics that are essential for intraoperative use, including time to alert, false-alarm burden per hour, and measurable decision impact [5,6]. Meaningful progress will require multicenter, surgeon-in-the-loop evaluations that adhere to contemporary AI trial-reporting standards [7].

Automated Loss of Signal Detection and Real-Time Alerts

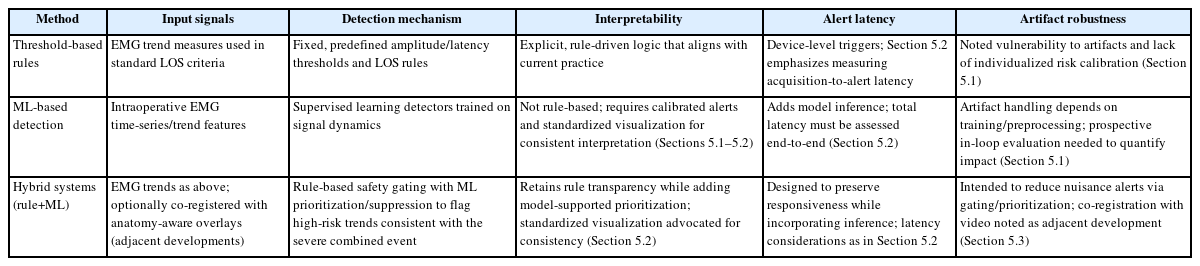

1. Detection paradigms: thresholds, machine learning, and hybrids

Threshold-based rules remain the foundation of current practice. Amplitude and latency thresholds derived from physiology and from prospective cohorts, including definitions of LOS and the sCE, are simple to apply, easy to interpret, and generally agnostic to device design. They are also aligned with INMSG recommendations for troubleshooting and for staging bilateral procedures. Their limitations are vulnerability to artifacts and a lack of individualized risk calibration [1,10].

ML-based detectors offer a complementary pathway. Learned classifiers analyze windows of EMG and estimate the probability of irritation or impending LOS while incorporating methods for artifact suppression, and early studies report high discrimination for abnormal morphologies [18]. In parallel, supervised pipelines for intraoperative MEP classification have reached expert-level accuracy across multiple centers, which supports the feasibility of robust real-time inference when datasets, preprocessing, and postprocessing are carefully designed. What remains absent in thyroid surgery is a prospective, continuous deployment that operates in an always-on mode, with explicit measurement of latency and analysis of its impact on intraoperative decisions [20].

A practical approach in the near term is a hybrid workflow that preserves established rules as a safety net and uses ML to increase sensitivity and to reject artifacts. Such systems can present confidence-gated alerts with graded urgency, for example, a caution level that signals an irritation pattern and a warning level that signals a high-risk trend consistent with the sCE [17,25].
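One possible shape for such a hybrid is sketched below in Python. Everything numeric here is an assumption chosen for illustration: the probability cut-offs (`caution_p`, `warning_p`), the artifact gate, and the decision to let the rule-based sCE check fire regardless of the artifact estimate. The inputs `p_risk` and `p_artifact` stand in for the outputs of hypothetical ML models that are not specified in the literature reviewed here.

```python
from enum import IntEnum


class AlertTier(IntEnum):
    NONE = 0
    CAUTION = 1   # irritation pattern
    WARNING = 2   # high-risk trend consistent with the sCE


def hybrid_alert(amp_ratio: float, lat_ratio: float,
                 p_risk: float, p_artifact: float,
                 caution_p: float = 0.5, warning_p: float = 0.8,
                 artifact_gate: float = 0.7) -> AlertTier:
    """Combine a rule-based safety net with confidence-gated ML output.

    amp_ratio  : current amplitude divided by baseline amplitude
    lat_ratio  : current latency divided by baseline latency
    p_risk     : hypothetical model probability of impending LOS
    p_artifact : hypothetical model probability that the change is technical
    """
    # Rule layer: the sCE criterion (>=50% amplitude drop with >=10% latency
    # rise) always produces a warning and is never suppressed by the models.
    if amp_ratio <= 0.5 and lat_ratio >= 1.10:
        return AlertTier.WARNING
    # ML layer: suppress alerts that the artifact model attributes to
    # technical causes, then grade the remaining risk.
    if p_artifact >= artifact_gate:
        return AlertTier.NONE
    if p_risk >= warning_p:
        return AlertTier.WARNING
    if p_risk >= caution_p:
        return AlertTier.CAUTION
    return AlertTier.NONE


if __name__ == "__main__":
    print(hybrid_alert(0.4, 1.2, p_risk=0.1, p_artifact=0.9).name)
    print(hybrid_alert(0.9, 1.0, p_risk=0.6, p_artifact=0.1).name)
```

Placing the rule layer first is a conservative design choice: the learned components can add earlier cautions or silence likely artifacts, but they cannot remove an alert that established criteria would have raised.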

2. Interfaces, latency, and reliability

Real-time clinical value depends on the total latency from acquisition to alert, including the sampling interval for APS, typically 1 to 3 Hz, along with preprocessing, postprocessing, model inference, and the time required for display and acknowledgement. Systems intended for intraoperative use should add less than 1 second of computational and display delay beyond the sampling cadence, present clear reasons for each alert through trend overlays, salient time–frequency views, or synchronized video frames, and incorporate structured troubleshooting for common artifacts such as tube rotation, inadequate contact, or excessive neuromuscular blockade [14,26]. Evidence from clinical waveform studies further underscores the importance of rigorous signal conditioning and amplitude normalization to ensure consistent interpretation across patients and cases [13]. To translate the above design choices into practice, Table 2 contrasts threshold-based, ML-based, and hybrid alerting with respect to inputs, detection logic, interpretability, acquisition-to-alert latency, and artifact robustness.
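The acquisition-to-alert budget described above reduces to simple arithmetic, sketched here in Python. The stage names and millisecond values are hypothetical placeholders rather than measurements from any device; the only figures taken from the text are the 1 to 3 Hz APS cadence and the target of less than 1 second of added computational and display delay.

```python
def latency_budget(aps_hz: float, stage_ms: dict, max_added_ms: float = 1000.0) -> dict:
    """Summarize acquisition-to-alert latency for one APS cadence.

    aps_hz   : stimulation frequency of automatic periodic stimulation
    stage_ms : per-stage processing delays in milliseconds (hypothetical)
    """
    sampling_interval_ms = 1000.0 / aps_hz
    added_ms = sum(stage_ms.values())
    return {
        "sampling_interval_ms": sampling_interval_ms,
        "added_ms": added_ms,
        # Worst case: a change occurs just after a stimulus, so it is only
        # observed one full sampling interval later, then processed.
        "worst_case_total_ms": sampling_interval_ms + added_ms,
        "within_budget": added_ms < max_added_ms,
    }


if __name__ == "__main__":
    stages = {"preprocess": 40, "inference": 120, "postprocess": 20, "display": 100}
    for hz in (1.0, 2.0):
        print(hz, latency_budget(hz, stages))
```

Separating the sampling interval from the added delay makes clear which part of the total is fixed by the stimulation protocol and which part is under the control of the software design.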

3. Evidence base and adjacent developments

Guidelines from the INMSG, together with multicenter outcome studies, provide the strongest clinical foundation for real-time alerting, and meta-analytic work has evaluated the impact of IONM on outcomes at scale [3]. In parallel, computer-vision methods can segment the RLN in open and endoscopic thyroidectomy, which makes it feasible to localize risk in space and to co-register EMG alerts with anatomy-aware overlays [3,4].

4. Regulatory and safety perspectives

Although vendor materials describe device features, peer-reviewed analyses of AI/ML medical devices emphasize lifecycle evidence, generalizability, and post-deployment surveillance. Benjamens et al. [27] cataloged US Food and Drug Administration-cleared AI/ML devices and underscored transparency. Fraser et al. [28] synthesized regulatory expectations for health software and AI, highlighting software lifecycle (IEC 62304), risk management (ISO 14971), usability (IEC 62366-1), and the need for change control in adaptive models. These frameworks translate directly to AI-enabled IONM modules [28].

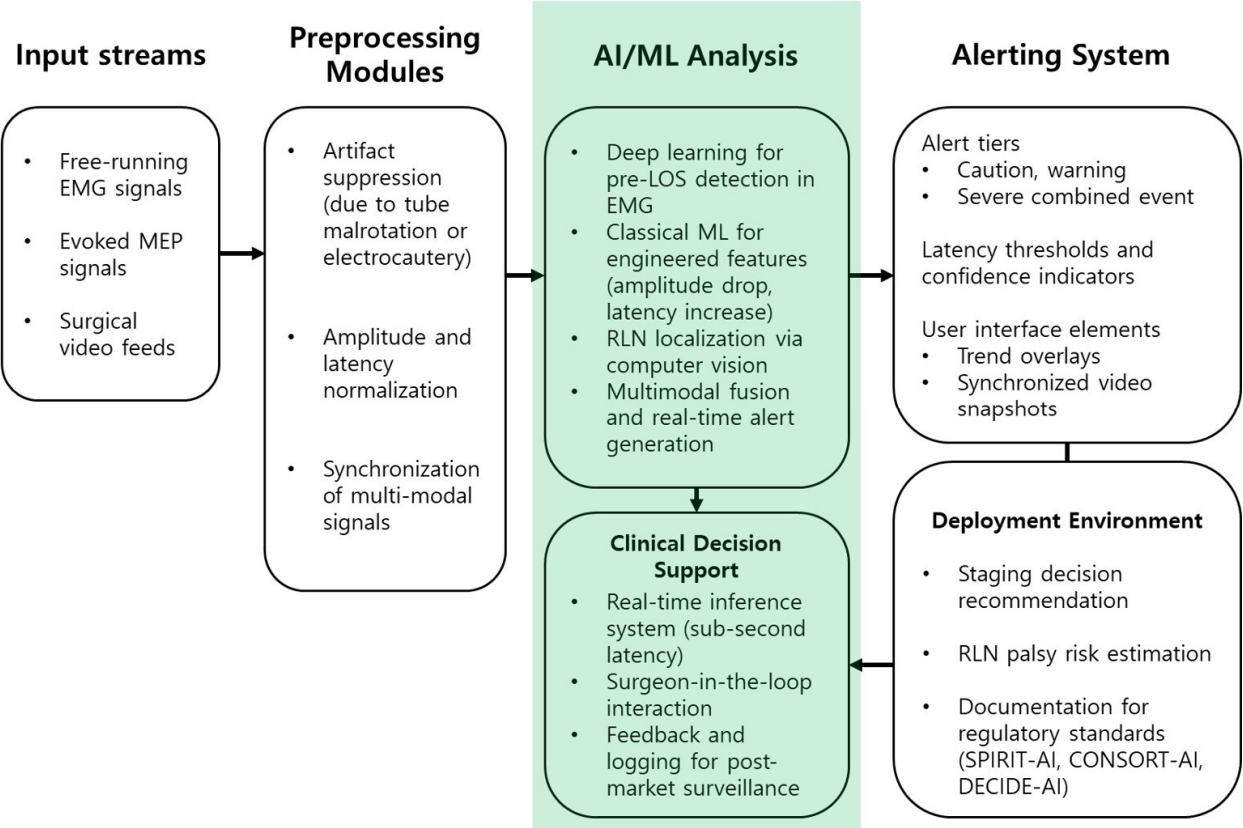

Clinical Translation and Integration Roadmap

1. Barriers to adoption

Data governance and privacy are foundational to clinical translation. Generalizable models require multicenter repositories of waveforms and video with harmonized labels and rich metadata that specify device characteristics, anesthetic regimen, and electrode placement. Building such resources depends on robust consent processes, rigorous de-identification, and institutional oversight that explicitly permits secondary use for algorithm development and external validation [27].

Regulatory approval demands a full software-as-a-medical-device pathway. AI-enabled detectors of LOS and risk estimators fall within this category and must satisfy evidence standards across the product lifecycle. Peer-reviewed regulatory syntheses emphasize clear requirements and hazard analysis, thorough verification and validation, usability engineering, cybersecurity controls, and post-market surveillance, together with transparent documentation of data lineage, model versioning, and processes for change management after deployment [20].

Surgeon trust and workflow fit determine real-world adoption. Human-factors studies in surgical AI show that acceptance depends on calibrated alerts, explanations that can be understood at a glance, and reliable suppression of artifact-driven false positives. Alarm fatigue undermines confidence. For neuromonitoring, the interface should display the rationale for each alert, present confidence intervals, and link every alert tier to a concrete troubleshooting action that aligns with established guideline algorithms [18].

2. Validation requirements: from offline accuracy to in-the-loop impact

Prospective evaluation and reporting standards are essential for moving from retrospective accuracy to clinical utility. The CONSORT-AI extension defines reporting items for randomized trials of AI interventions, SPIRIT-AI sets protocol expectations, and DECIDE-AI outlines requirements for early live clinical evaluations with attention to human-AI interaction, error analysis, usability, and context of use [5,6]. For IONM, studies should report not only area under the curve (AUC), sensitivity, specificity, and calibration but also time to alert, false-alarm rate per hour, and the impact on surgical decisions, together with patient-centered outcomes that include short-term vocal fold mobility and voice quality and quality of life at 3 to 12 months [5].
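Two of the real-time metrics named above, false-alarm rate per hour and time to alert, can be computed from alert timestamps and adjudicated event windows. The Python sketch below assumes one particular operationalization (an alert counts as true if it falls inside any adjudicated window, and time to alert is measured from window onset); these definitions and the function names are illustrative assumptions, and a study protocol would need to prespecify its own.

```python
def false_alarms_per_hour(alert_times_s, event_windows_s, monitored_s):
    """Alerts falling outside every adjudicated event window, per monitored hour."""
    false_alerts = [
        t for t in alert_times_s
        if not any(start <= t <= end for start, end in event_windows_s)
    ]
    return len(false_alerts) / (monitored_s / 3600.0)


def time_to_alert(alert_times_s, event_windows_s):
    """Delay from each event onset to the first alert inside its window.

    Returns one entry per event; None marks an event with no alert (a miss).
    """
    delays = []
    for start, end in event_windows_s:
        hits = [t - start for t in alert_times_s if start <= t <= end]
        delays.append(min(hits) if hits else None)
    return delays


if __name__ == "__main__":
    alerts = [100, 650, 3000, 5000]          # seconds from start of monitoring
    events = [(600, 900), (4000, 4200)]      # adjudicated (onset, offset) windows
    print(false_alarms_per_hour(alerts, events, monitored_s=7200))
    print(time_to_alert(alerts, events))
```

Reporting missed events explicitly, rather than dropping them from the time-to-alert summary, keeps sensitivity and timeliness visible side by side.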

3. User interface and alerting principles

Design priorities focus on graded alerts that are tied to explicit actions, clear explainability through salient EMG windows and amplitude and latency overlays referenced to baseline, and synchronized video that makes the anatomic locus of risk visible. Interfaces should also present uncertainty estimates and incorporate robust artifact detection, including recognition of tube rotation signatures, to limit false positives, while maintaining workflow-aware ergonomics with minimal additional hardware and a small footprint on existing consoles [13]. Evidence from intraoperative signal analytics and voice outcome studies further indicates that consistent signal conditioning and standardized visualization improve interpretability and facilitate adoption [24].

4. Multidisciplinary collaboration

Effective translation requires a joint governance model that brings together endocrine and otolaryngology surgeons who provide clinical leadership and adjudicate labels, neuromonitoring specialists and anesthesiologists who define protocols, artifact taxonomies and APS parameters, data scientists and engineers who manage modeling, machine learning operations, and drift monitoring, human-factors experts who conduct usability testing, and regulatory teams who ensure compliance with software-as-a-medical-device requirements. Editorials and guidance across the AI trial literature emphasize multi-stakeholder design from protocol development through post-market surveillance [5].

5. Step-by-step roadmap

An effective implementation pathway begins by defining the clinical decision and the target operating points. Specify how the system should change behavior by setting explicit sCE probability thresholds within a defined time window and by recommending staging when the ISR probability remains below a prespecified level after LOS, and pre-register primary and secondary outcomes [2].

Standardization of acquisition and labeling is essential. Harmonize sampling rates, filtering, APS frequency, and event ontologies such as loss-of-signal types, sCEs, and artifact classes, and establish multirater adjudication with rigorous quality control [2].

Model development should follow rigorous ML practice by using cross-site splits with site-held-out testing. Report calibration curves and decision-curve analyses and quantify subgroup performance. For time-series models include explicit latency budgets, and for artifact robustness include adversarial tests [18].
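Site-held-out testing can be illustrated with a leave-one-site-out splitter, sketched below in plain Python. The record format (a list of dictionaries with a `site` key) is a hypothetical convention adopted for the example; libraries such as scikit-learn offer grouped splitters that serve the same purpose, and nothing here reflects the procedure of a specific cited study.

```python
from collections import defaultdict


def leave_one_site_out(records, site_key="site"):
    """Yield (held_out_site, train_indices, test_indices) for each site.

    Every case from the held-out site goes to the test set, so no site
    contributes to both training and testing within a fold.
    """
    by_site = defaultdict(list)
    for idx, rec in enumerate(records):
        by_site[rec[site_key]].append(idx)
    for held_out in sorted(by_site):
        test_idx = by_site[held_out]
        train_idx = sorted(
            i for site, idxs in by_site.items() if site != held_out for i in idxs
        )
        yield held_out, train_idx, test_idx


if __name__ == "__main__":
    cases = [{"site": "A"}, {"site": "B"}, {"site": "A"}, {"site": "C"}]
    for site, train, test in leave_one_site_out(cases):
        print(site, train, test)
```

Splitting by site rather than by case prevents device-specific filtering or gain settings from leaking between training and test data, which is the failure mode that random splits tend to hide.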

Human-factors engineering is required throughout. Prototype the interface and alerts with surgeons and technologists, run simulated cases and cognitive walkthroughs, and iterate to minimize alert fatigue while maintaining adequate sensitivity.

Before clinical use, conduct shadow-mode evaluation by prospectively running the model in parallel with standard care without influencing decisions, and measure time to alert, false-alarm rate, disagreement with human interpretation, and system reliability including uptime and missed data [5].

Subsequently, undertake a controlled pilot that enables surgeon-in-the-loop use with predefined stopping rules and independent safety monitoring, and report methods and results in accordance with SPIRIT-AI and CONSORT-AI [5,6].

Finally, plan scale-out and surveillance with post-market monitoring for performance drift, recalibration when devices or anesthesia change, equity checks across subgroups, and transparent periodic reporting that aligns with contemporary reviews of medical-AI deployment [28]. Figure 2 provides a unifying system view that we reference throughout this section when discussing governance, validation, user interface, and staged deployment.

Figure 2. Architecture and workflow of AI-enabled IONM. Multimodal inputs (free-running EMG, evoked MEP, synchronized surgical video) undergo artifact suppression, amplitude/latency normalization, and cross-modal synchronization before learning-based inference (deep temporal models for pre-LOS detection, classical ML on engineered features, computer-vision-based RLN localization, multimodal fusion). Outputs include graded alerts with confidence, surgeon-facing trend overlays and video snapshots, and decision-support functions (staging recommendation, RLN palsy risk estimation) aligned with SPIRIT-AI/CONSORT-AI/DECIDE-AI documentation. AI, artificial intelligence; ML, machine learning; EMG, electromyography; MEP, motor evoked potential; LOS, loss of signal; RLN, recurrent laryngeal nerve; SPIRIT-AI, Standard Protocol Items: Recommendations for Interventional Trials–Artificial Intelligence; CONSORT-AI, Consolidated Standards of Reporting Trials–Artificial Intelligence; DECIDE-AI, Developmental and Exploratory Clinical Investigations of DEcision support systems driven by Artificial Intelligence; IONM, intraoperative neuromonitoring.

Conclusion and Future Directions

The arc of evidence suggests that AI/ML can shift IONM from reactive thresholding to proactive, context-aware guidance. On the signal-processing axis, deep temporal models recognize pre-LOS morphologies more sensitively than rule-based heuristics; on the prognostics axis, signal-anchored thresholds calibrated in multicenter cohorts already inform staging decisions and offer strong supervision for ML [3,10]. On the spatial axis, computer-vision models can identify the RLN and adjacent anatomy in surgical video, creating the possibility of anatomy-aware overlays that co-register with EMG risk scores to pinpoint where mitigation is needed. While aggregate meta-analyses continue to debate the overall effect of IONM on palsy rates at the population level, the combination of standardized continuous IONM (c-IONM) trend criteria, prospective outcome correlates, and emerging AI pipelines provides a credible path to earlier warnings, fewer unnecessary alerts, and more consistent intraoperative decisions [2]. The remaining hurdles are not purely technical: generalizable datasets, prospective in-loop evaluations with meaningful endpoints, human-factors-driven interfaces, and governance compliant with software-as-a-medical-device requirements will determine whether AI-assisted IONM improves real-world outcomes [4].

1. Priority directions for the next decade

1) Standardize data and evaluation

Adopt shared schemas for EMG/MEP and synchronized video, including device metadata and artifact taxonomies; report real-time metrics (time-to-alert, false-alarm rate/hour) alongside AUC and calibration. Benchmarks should require external, site-held-out validation to stress generalization [1,13].

2) Build open, multicenter annotated repositories

De-identified waveform repositories with synchronized stimulation logs and adjudicated event labels (LOS type, sCE onset/offset, and artifact episodes) are prerequisites for robust training and comparison. Clinical series in c-IONM show that rich labels exist and can be operationalized [10,20].

3) Fuse modalities and context

Combine EMG-based risk with anatomy-aware video segmentation to contextualize alerts at the precise dissection site; integrate patient factors to individualize thresholds [4]. Initial feasibility in open and endoscopic thyroidectomy supports a near-term translational program for multimodal fusion [29].

4) Prospective, surgeon-in-the-loop trials with longitudinal endpoints

Move beyond offline accuracy to measure time-to-mitigation, staging decisions, RLN palsy incidence, and 3- to 12-month voice quality and quality-of-life outcomes; report per SPIRIT-AI, CONSORT-AI, and DECIDE-AI [30].

5) Deployment engineering and governance

Engineer for sub-second inference beyond APS cadence; implement uncertainty calibration and artifact gating; and align development with evolving regulatory expectations for medical-AI devices (software lifecycle, risk management, usability, and change control) [27]. Published regulatory reviews provide the necessary scaffolding for safe scale-out [28,31].

If realized, these steps could transform IONM into a predictive safety layer that consistently recognizes danger earlier, explains its recommendations clearly, and measurably reduces RLN injury while preserving surgical efficiency. The opportunity is not merely to automate threshold application, but to elevate monitoring into a multimodal, human-centered system that helps teams make better decisions under uncertainty.

Notes

Funding

None.

Conflict of Interest

Seung Hoon Woo is the Editor-in-Chief of the journal, but was not involved in the review process of this manuscript. Otherwise, there is no conflict of interest to declare.

Data Availability

None.

Author Contributions

Conceptualization: YSL; Data curation: YSL; Formal analysis: YSL; Investigation: YSL; Methodology: YSL; Project administration: SHW; Software: YSL; Visualization: YSL; Writing–original draft: YSL; Writing–review & editing: all authors.